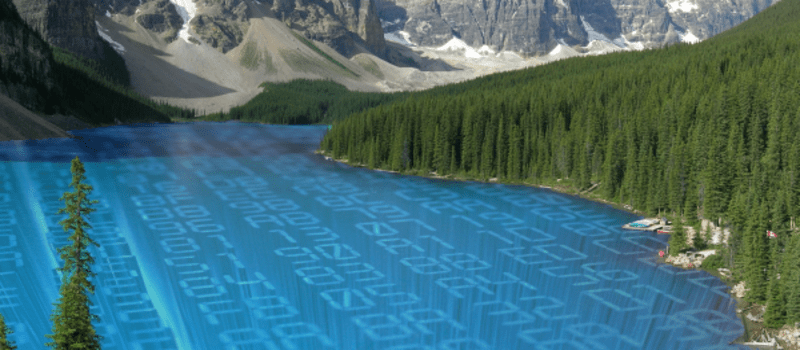

As organizations take in data from more sources than ever, the data lake has emerged as a key tool for working with big data: a single reservoir for storing, managing, and analyzing large volumes of structured and unstructured data. This article explains what a data lake is, its key features, how it compares with a data warehouse, and the benefits and challenges it brings.

Definition and Characteristics of Data Lakes

A data lake is a centralized repository that lets organizations store structured, semi-structured, and unstructured data at virtually any scale. Traditional storage solutions such as relational databases and data warehouses use schema-on-write: data must fit a predefined structure before it is stored. Data lakes instead use schema-on-read: data is ingested in its raw form, and structure is applied only when the data is read for analysis. This lets organizations capture and retain diverse data types, including raw and unprocessed information, without designing every schema up front. Data lakes typically run on distributed storage systems and parallel processing frameworks, which allows them to scale with the exponential growth of data at relatively low cost. A data lake is more than storage, however: it is a platform for data exploration and analytics, and a foundation for advanced data processing and machine learning applications.
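To make the schema-on-write versus schema-on-read distinction concrete, here is a minimal sketch using only the Python standard library. The event records, the `WAREHOUSE_SCHEMA` dictionary, and the function names are hypothetical; a real lake would keep raw files in object storage and apply schemas through a query engine rather than a Python loop.

```python
import json

# Hypothetical raw events from two sources with different shapes.
RAW_EVENTS = [
    '{"user": "alice", "action": "login", "ts": "2024-01-01T09:00:00"}',
    '{"user": "bob", "action": "purchase", "amount": "19.99", "ts": "2024-01-01T09:05:00"}',
    '{"device_id": 42, "temperature_c": 21.5}',
]

# Schema-on-write: a record must match the schema before it is stored.
WAREHOUSE_SCHEMA = {"user": str, "action": str, "ts": str}

def write_with_schema(raw_lines):
    """Store only records that carry every schema field; reject the rest."""
    stored, rejected = [], []
    for line in raw_lines:
        record = json.loads(line)
        if all(isinstance(record.get(k), t) for k, t in WAREHOUSE_SCHEMA.items()):
            # Fields outside the schema (e.g. "amount") are dropped here.
            stored.append({k: record[k] for k in WAREHOUSE_SCHEMA})
        else:
            rejected.append(line)
    return stored, rejected

# Schema-on-read: everything is kept as-is; each reader applies its own schema.
def read_purchases(raw_lines):
    """Project raw records onto a 'purchases' view at query time."""
    for line in raw_lines:
        record = json.loads(line)
        if record.get("action") == "purchase":
            yield {"user": record["user"], "amount": float(record["amount"])}

lake = list(RAW_EVENTS)  # the lake keeps every raw line, untouched
stored, rejected = write_with_schema(RAW_EVENTS)
print(len(stored), len(rejected))  # 2 1
print(list(read_purchases(lake)))  # [{'user': 'bob', 'amount': 19.99}]
```

The schema-on-write path rejects the sensor reading and discards Bob's `amount`, while the raw copy keeps both available for any later reader that needs them.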

Architecture and Components

A typical data lake architecture comprises several interconnected components designed to efficiently manage and derive insights from vast and varied datasets.

- Data Ingestion Layer: Brings raw data into the data lake from diverse sources, whether in scheduled batches or as continuous streams.

- Storage Layer: Holds data in its raw, original format, typically on distributed file systems or object storage that can accommodate very large datasets.

- Metadata Store: Records metadata that gives the stored data context, structure, and lineage, making data discovery and governance efficient.

- Data Processing Layer: Processes and transforms raw data into usable formats, employing distributed computing frameworks for scalable data processing.

- Data Catalog: Indexes and organizes the metadata, enabling users to discover, understand, and access the available data assets within the data lake.

- Security and Access Control: Implements robust security measures, including encryption and access controls, to ensure data privacy and compliance with regulatory requirements.

- Data Governance Framework: Defines policies and procedures for data quality, lineage, and compliance, ensuring the reliability and trustworthiness of data within the data lake.

- Query and Analytics Engines: Provides tools and interfaces for users to run queries, perform analytics, and derive meaningful insights from the stored data.

- Integration with Data Warehouses: Facilitates seamless integration with traditional data warehouses, allowing organizations to leverage both technologies in a complementary manner.

- Monitoring and Management Tools: Monitors the performance and health of the data lake, offering management tools for troubleshooting, optimization, and resource allocation.
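The first three components can be illustrated with a toy model. In this sketch, which is an assumption-laden illustration rather than a reference design, a local temporary directory stands in for object storage, an in-memory list stands in for the metadata store, and the class name `MiniLake` and its key layout are hypothetical.

```python
import hashlib
import tempfile
from datetime import datetime, timezone
from pathlib import Path

class MiniLake:
    """Toy data lake (hypothetical): a local directory stands in for object
    storage, and an in-memory list stands in for the metadata store."""

    def __init__(self, root: Path):
        self.root = root
        self.metadata_store = []  # one entry per ingested object

    def ingest(self, source: str, payload: bytes, fmt: str) -> Path:
        """Ingestion layer: land the payload unchanged in the raw zone."""
        now = datetime.now(timezone.utc)
        digest = hashlib.sha256(payload).hexdigest()
        # Raw zone laid out by source and ingestion date.
        key = Path("raw") / source / f"dt={now:%Y-%m-%d}" / f"{digest[:16]}.{fmt}"
        path = self.root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(payload)  # storage layer: bytes stored as-is
        # Metadata store: context and lineage for later discovery.
        self.metadata_store.append({
            "key": key.as_posix(),
            "source": source,
            "format": fmt,
            "sha256": digest,
            "bytes": len(payload),
            "ingested_at": now.isoformat(),
        })
        return path

with tempfile.TemporaryDirectory() as tmp:
    lake = MiniLake(Path(tmp))
    lake.ingest("crm", b'{"customer": 1, "name": "Acme"}', "json")
    lake.ingest("sensors", b"device,temp\n42,21.5\n", "csv")
    for entry in lake.metadata_store:
        print(entry["key"], entry["bytes"])
```

Note that ingestion does no parsing at all: the JSON and CSV payloads are stored byte-for-byte, and only the metadata entry records what they are and where they came from.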

Data Lake vs Data Warehouse

A data lake and a data warehouse are distinct but complementary components of modern data architecture. A data lake is a centralized repository that follows a schema-on-read approach and accommodates large volumes of raw, diverse data, including unstructured data. It emphasizes flexibility: data can be ingested without a predefined structure. A data warehouse, in contrast, follows a schema-on-write model, in which data is organized into a structured format before it is stored. It is optimized for complex queries and analysis over structured, processed data.

The two complement each other because their strengths differ. A data lake is an expansive, scalable reservoir for large volumes of raw data; it supports exploration and experimentation and provides a foundation for machine learning and advanced analytics. A data warehouse excels at structured analytics, offering fast query performance for business intelligence reporting. Organizations that integrate the two can use the lake for flexible storage and exploration and the warehouse for optimized querying and reporting, covering a wider range of data types and use cases than either could alone.
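A common way to combine the two is to keep raw data in the lake and load a cleaned subset into the warehouse. The sketch below is hypothetical throughout: the events and the `curate` function are invented for illustration, and an in-memory SQLite database from Python's standard `sqlite3` module stands in for a real warehouse.

```python
import json
import sqlite3

# Raw purchase events as they might sit in the lake (hypothetical data).
raw_lake_events = [
    '{"user": "alice", "amount": "12.50", "region": "EU"}',
    '{"user": "bob", "amount": "7.25", "region": "US"}',
    '{"user": "carol", "amount": "not-a-number", "region": "EU"}',
    '{"user": "dave", "amount": "30.00", "region": "EU"}',
]

def curate(lines):
    """Transform step: parse raw JSON and keep only rows that fit the warehouse schema."""
    for line in lines:
        record = json.loads(line)
        try:
            row = (record["user"], float(record["amount"]), record["region"])
        except (KeyError, ValueError):
            continue  # malformed rows stay in the lake for later inspection
        yield row

# An in-memory SQLite database stands in for the warehouse (schema-on-write).
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE purchases (customer TEXT NOT NULL, amount REAL NOT NULL, region TEXT NOT NULL)"
)
warehouse.executemany("INSERT INTO purchases VALUES (?, ?, ?)", curate(raw_lake_events))

# BI-style query against the structured table.
rows = warehouse.execute(
    "SELECT region, SUM(amount) FROM purchases GROUP BY region ORDER BY region"
).fetchall()
print(rows)  # [('EU', 42.5), ('US', 7.25)]
```

The malformed row never reaches the warehouse, yet nothing is lost: the original line remains in the lake, where an analyst can inspect it or a revised pipeline can reprocess it.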

Benefits of Implementing a Data Lake

Implementing a data lake brings several benefits for modern data management. The first is scalability: a data lake can grow to hold vast amounts of structured and unstructured data as data volumes expand. The second is flexibility: because the schema-on-read approach stores raw data without a predefined structure, teams can explore and experiment freely, which is especially valuable when new data sources and formats keep emerging. The third is cost-effectiveness: data lakes run on scalable, distributed storage that keeps storage costs down, and they avoid the upfront work of structuring data before it is stored. Finally, a centralized data lake encourages collaboration, since teams and departments can share diverse datasets and take a more holistic approach to data-driven decision-making. Overall, a well-implemented data lake helps organizations get full value from their data assets, driving innovation and a competitive edge.

Challenges and Best Practices

Data lake projects come with their own challenges, and with best practices for meeting them. One major challenge is data governance and security: with a vast influx of diverse data, controlling access and ensuring compliance with regulations become critical. A robust governance framework, with well-defined policies for data quality, lineage, and access control, is essential. Another challenge is that a data lake can degrade into a “data swamp” when metadata is poorly managed and users can no longer find the data they need. A comprehensive data catalog with well-organized metadata is the best practice for preventing this.
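One way a catalog can guard against a data swamp is to refuse datasets that arrive without basic documentation. The sketch below assumes a policy (every dataset needs an owner and a description) and uses hypothetical class names, paths, and tags; production catalogs are dedicated services with far richer metadata and permission models.

```python
from dataclasses import dataclass, field

@dataclass
class CatalogEntry:
    path: str
    owner: str
    description: str
    tags: set[str] = field(default_factory=set)
    upstream: list[str] = field(default_factory=list)  # lineage: datasets this one derives from

class DataCatalog:
    """Minimal catalog (hypothetical) that refuses undocumented datasets."""

    REQUIRED = ("owner", "description")  # assumed governance policy

    def __init__(self):
        self._entries = {}

    def register(self, entry: CatalogEntry) -> None:
        missing = [name for name in self.REQUIRED if not getattr(entry, name).strip()]
        if missing:
            raise ValueError(f"{entry.path}: missing {', '.join(missing)}")
        self._entries[entry.path] = entry

    def search(self, keyword: str) -> list[str]:
        """Return sorted paths whose description or tags mention the keyword."""
        kw = keyword.lower()
        return sorted(
            e.path for e in self._entries.values()
            if kw in e.description.lower() or kw in {t.lower() for t in e.tags}
        )

catalog = DataCatalog()
catalog.register(CatalogEntry("raw/crm/customers", "sales-ops",
                              "Daily CRM customer export", {"customers", "pii"}))
catalog.register(CatalogEntry("curated/customers_clean", "data-eng",
                              "Deduplicated customers", {"customers"},
                              upstream=["raw/crm/customers"]))
try:
    catalog.register(CatalogEntry("raw/misc/dump.csv", "", ""))
except ValueError as err:
    print("rejected:", err)  # rejected: raw/misc/dump.csv: missing owner, description

print(catalog.search("customers"))  # ['curated/customers_clean', 'raw/crm/customers']
```

The `upstream` field doubles as simple lineage: it records that the curated table was derived from the raw CRM export, which helps when auditing where a number came from.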

The volume and variety of data in a data lake can also cause performance problems during processing and analysis. Best practices include optimizing the data lake architecture, employing appropriate indexing mechanisms so that queries can skip data they do not need, and using efficient processing frameworks so that data can be retrieved in a timely way. Organizations should also invest in data literacy so that users across the organization can draw meaningful insights from the data lake. Finally, regular monitoring and auditing of the data lake infrastructure help teams identify and fix issues promptly, keeping the data lake reliable and performant over time. By addressing these challenges and following these best practices, organizations can unlock the full potential of their data lake investments.
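In data lakes, a common alternative to traditional indexes is partitioning: files are grouped into directories named after a frequently filtered column, so a query can skip whole directories. The sketch below assumes Hive-style `key=value` directory names with a hypothetical `dt` (date) column and invented object keys; it only selects file paths and does not read any data.

```python
from datetime import date

# Hypothetical object keys in a Hive-style layout: one directory per day.
OBJECT_KEYS = [
    "events/dt=2024-01-01/part-000.parquet",
    "events/dt=2024-01-01/part-001.parquet",
    "events/dt=2024-01-02/part-000.parquet",
    "events/dt=2024-01-03/part-000.parquet",
    "events/dt=2024-01-04/part-000.parquet",
]

def partition_date(key: str) -> date:
    """Extract the dt=YYYY-MM-DD partition value from an object key."""
    for segment in key.split("/"):
        if segment.startswith("dt="):
            return date.fromisoformat(segment[3:])
    raise ValueError(f"no dt= partition in {key}")

def prune(keys, start: date, end: date):
    """Keep only files whose partition falls in [start, end]; the rest are never opened."""
    return [k for k in keys if start <= partition_date(k) <= end]

selected = prune(OBJECT_KEYS, date(2024, 1, 2), date(2024, 1, 3))
print(f"scanning {len(selected)} of {len(OBJECT_KEYS)} files")  # scanning 2 of 5 files
```

The pruning decision uses only file paths, so it costs almost nothing compared with opening every file; the trade-off is that it helps only queries that filter on the partition column.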

Conclusion

A data lake is a strategic asset for organizations navigating a complex data landscape. By providing a unified platform for diverse data types and enabling advanced analytics, it helps businesses make better-informed decisions. As technology continues to advance, understanding how data lakes work becomes essential for organizations seeking to get full value from their data, and adopting this approach to data management opens the door to new insights and innovation.